Whether it is ChatGPT, GPT-4, Bard, or any of the other AI systems competing for public attention, these systems represent a significant leap forward in Malware as a Service (MaaS) capabilities. While there are supposed to be guardrails that prevent malicious activities such as producing malicious code, it has already been demonstrated that these protections are woefully inadequate. It is only a matter of time before a malicious entity either acquires or develops one of these systems, without even those meager guardrails, and begins offering it as a digital assistant to anyone on the internet.

Why the doom and gloom prognostication? After all, these systems are still in their infancy and people are just learning to use them. It’s a combination of what has already happened in interactions between the chatbots and humans, and what history has repeatedly demonstrated can happen when something is thought up and built before its implications are thought out. What do I mean and where do I think this is going?

On the first point, consider this article from the New York Times, which details how a conversation between a journalist and ChatGPT took an unnerving turn.

On the second point, history offers plenty of examples. The original use of TNT (trinitrotoluene) was as a yellow dye in textile manufacturing. Barbed wire was originally designed for controlling cattle, sarin gas was originally a pesticide to combat the German weevil, and a number of farm tools, such as the kama and sai, later became better known for their martial arts applications.

I know: what does all this have to do with hash-based detection being rendered moot, or with Malware as a Service? Given the examples above, and the fact that these systems have already been used, in a limited way, to create this type of software, it doesn’t take much imagination to see how they could be used, and further trained, to better meet that market demand.

Polymorphic malware already exists today. Each time polymorphic malware lands on a different host, it modifies itself slightly to avoid hash detection. This type of malware requires sophisticated coding capabilities and in-depth knowledge of the operating system(s) being attacked, which immediately limits the pool of likely threat actors. However, an AI-based digital assistant with the entire internet at its digital fingertips necessarily has access to malware as well as in-depth knowledge of the various operating systems, their vulnerabilities and weaknesses, and the tactics used to successfully attack and compromise them. With that information, the ability to generate brand-new code, access to the coding techniques needed to produce this kind of sophisticated software, and a “desire” to be helpful, the AI only needs someone to ask it to write the malicious software.
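To make the hash-evasion point concrete, here is a minimal sketch using Python’s standard `hashlib`. The byte strings are harmless placeholders standing in for two copies of the same program that differ by a single byte, and the one-entry blocklist is a hypothetical stand-in for a hash-based detection database. It is an illustration of why exact-hash matching is brittle, not a model of any particular product.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the hex-encoded SHA-256 digest of a byte string."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical placeholders for two copies of the "same" program. The second
# differs by one byte, the way a polymorphic sample mutates on each host.
original = b"benign placeholder payload v1"
variant = b"benign placeholder payload v2"

# A hash blocklist only knows the digests of samples it has already seen.
blocklist = {sha256_hex(original)}


def is_blocked(sample: bytes) -> bool:
    """Exact-match lookup: flag a sample only if its digest is listed."""
    return sha256_hex(sample) in blocklist


print(is_blocked(original))  # True
print(is_blocked(variant))   # False: one changed byte yields a new digest
```

Because a cryptographic hash is designed so that any change to the input produces an unrelated digest, the defender has to have seen every individual variant in advance, which is exactly what self-modifying code prevents.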

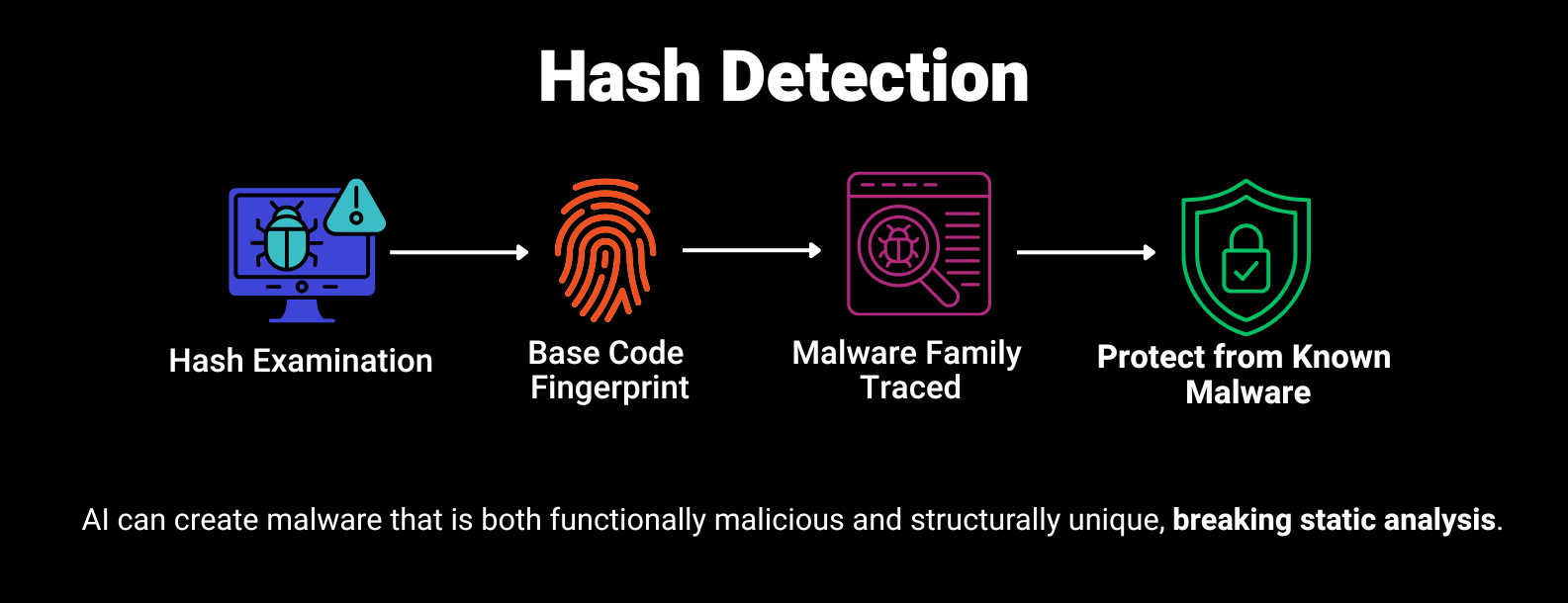

I know what you are going to say: polymorphic malware changes individual files to evade the initial hash check, but static analysis of the code can still identify similarities in the underlying base-code fingerprint, trace the malware back to its root “family,” and stop it from infecting systems. But what if the AI is asked to create a unique piece of software that performs these functions? Without that fingerprint, there is no association with a malware family. Yes, certain commands and techniques will still have to be used. But if they are used in novel ways, defense tools are going to struggle and most likely fail to adequately detect, much less protect against, these newly minted, unique malware attacks. And even the least sophisticated person can ask the right question.
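The “family” idea can be illustrated with a toy similarity measure. Real-world fuzzy-hashing tools such as ssdeep and TLSH do this far more robustly; the sketch below is instead a simple Jaccard similarity over byte n-grams, and the sample strings and the 0.5 threshold are hypothetical values chosen only for illustration. It shows why a lightly mutated variant still matches its family while independently written code with the same purpose does not.

```python
def ngrams(data: bytes, n: int = 4) -> set[bytes]:
    """Collect every overlapping n-byte window in the sample."""
    return {data[i:i + n] for i in range(len(data) - n + 1)}


def jaccard(a: bytes, b: bytes, n: int = 4) -> float:
    """Jaccard similarity of two samples' n-gram sets, from 0.0 to 1.0."""
    ga, gb = ngrams(a, n), ngrams(b, n)
    if not ga and not gb:
        return 1.0
    return len(ga & gb) / len(ga | gb)


FAMILY_THRESHOLD = 0.5  # hypothetical cut-off for this illustration

# Harmless placeholder "code" for a known family member and two newcomers.
known_family_sample = b"init(); load_settings(); run_task_a(); run_task_b(); cleanup();"
mutated_variant = b"init(); load_settings(); run_task_a(); run_task_c(); cleanup();"
novel_rewrite = b"while True: poll queue, handle job, sleep"

for name, sample in [("mutated", mutated_variant), ("novel", novel_rewrite)]:
    score = jaccard(known_family_sample, sample)
    # Report whether each sample would be linked to the known family.
    print(name, round(score, 2), score >= FAMILY_THRESHOLD)
```

The mutated variant shares almost all of its n-grams with the known sample, so it is linked to the family; the rewrite shares essentially none, so similarity-based matching has nothing to hold on to.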

Looking to the future, all is not lost. Yes, it is going to become increasingly challenging for those who protect systems, companies, and data from attack. Defenders have long touted the inclusion of AI/ML and behavior analysis engines as an added layer of defense. Historically, these engines have been of limited efficacy and mostly a marketing tool. However, as the offense shifts to unique, AI-created attacks, hash-based detection will continue its dive toward obscurity and irrelevance, and contextually aware behavior analysis will become the keystone of security protocols. It is increasingly important for defenders to understand and use these same powerful AI systems to detect and protect against the increasingly unique and sophisticated attacks that are not just coming but are already here.
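The difference between hash-based and behavior-based detection can be sketched in a few lines. Everything here is hypothetical: the behavior names, their weights, the alert threshold, and the placeholder hashes are invented for illustration, and a real contextually aware engine would weigh process lineage, timing, and environment rather than summing a fixed table. The point is only that the verdict depends on what a process does, not on its digest.

```python
from dataclasses import dataclass, field

# Hypothetical behavior weights; a real engine would learn or tune these.
BEHAVIOR_WEIGHTS = {
    "spawned_by_document_viewer": 2,
    "disables_backup_service": 4,
    "rapid_file_rewrites": 4,
    "contacts_new_domain": 1,
}
ALERT_THRESHOLD = 6  # hypothetical cut-off for this illustration


@dataclass
class ProcessObservation:
    sha256: str  # recorded for reference but ignored by the verdict
    behaviors: list[str] = field(default_factory=list)


def risk_score(obs: ProcessObservation) -> int:
    """Sum the weights of distinct observed behaviors; unknown ones score 0."""
    return sum(BEHAVIOR_WEIGHTS.get(b, 0) for b in set(obs.behaviors))


def should_alert(obs: ProcessObservation) -> bool:
    """Alert when the combined behavior score reaches the threshold."""
    return risk_score(obs) >= ALERT_THRESHOLD


suspicious = ["spawned_by_document_viewer", "disables_backup_service", "rapid_file_rewrites"]
# Two samples with different (placeholder) hashes but identical behavior.
sample_a = ProcessObservation(sha256="a" * 64, behaviors=suspicious)
sample_b = ProcessObservation(sha256="b" * 64, behaviors=suspicious)
benign = ProcessObservation(sha256="c" * 64, behaviors=["contacts_new_domain"])

print(should_alert(sample_a), should_alert(sample_b), should_alert(benign))  # True True False
```

Both suspicious samples are flagged even though neither hash has ever been seen, while the benign process stays below the threshold.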